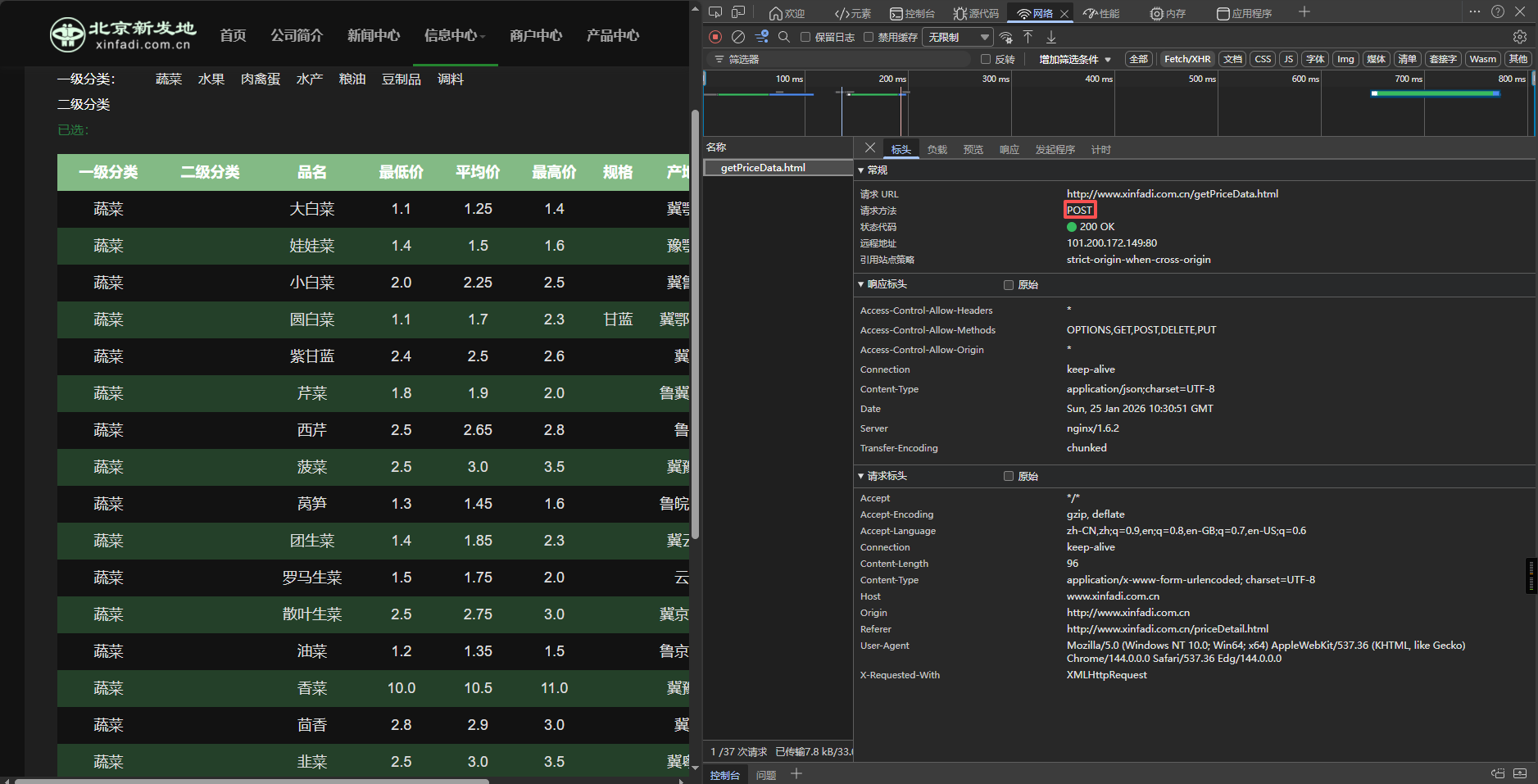


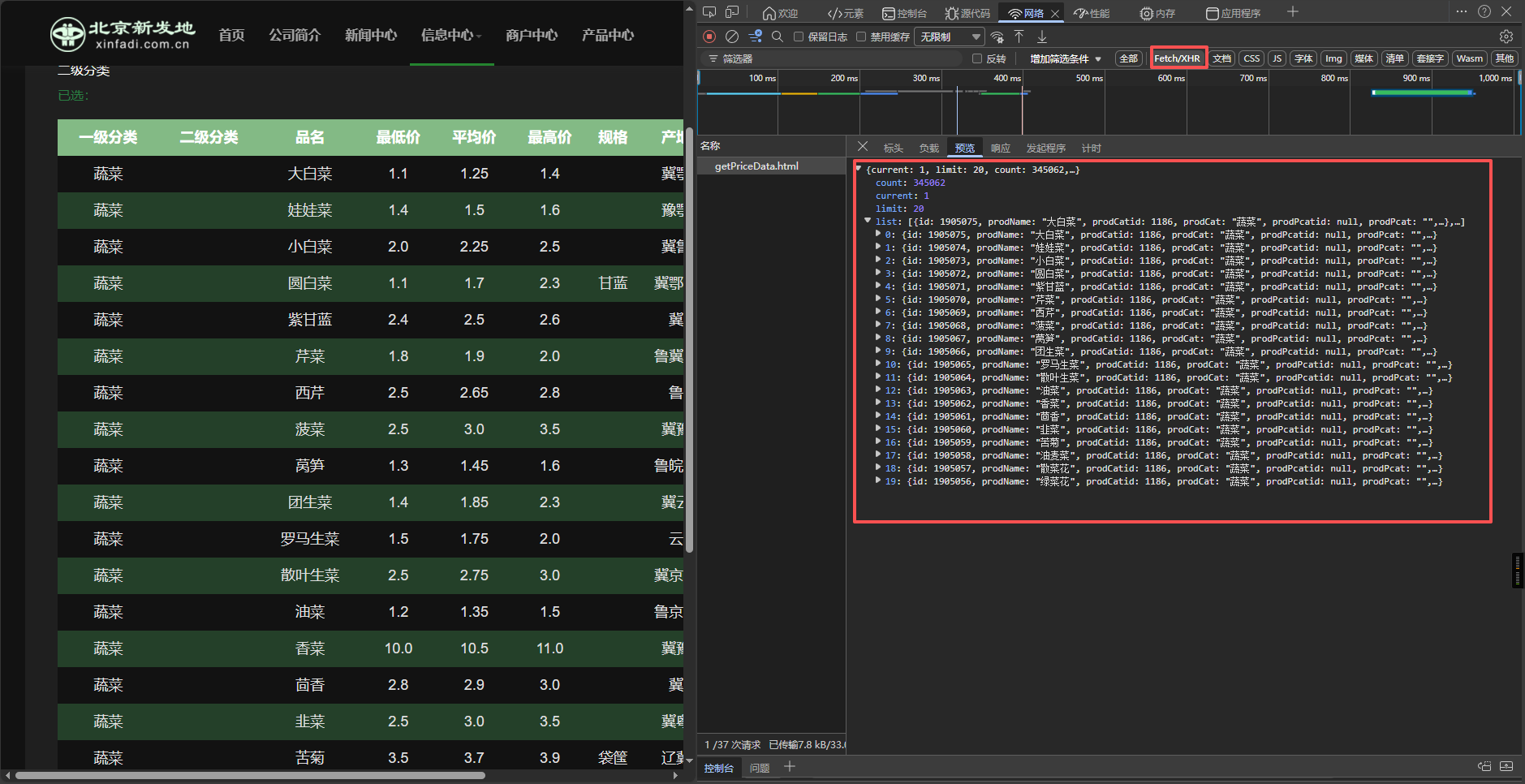

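```python
import csv
import time

import requests

# POST endpoint that serves the price table on xinfadi.com.cn
url = "http://www.xinfadi.com.cn/getPriceData.html"

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Content-Type": "application/x-www-form-urlencoded",
}

# Form fields sent with every request: "current" is the page number, "limit" the page size
base_data = {
    "limit": "20",
    "current": "1",
    "pubDateStartTime": "2024/01/01",
    "pubDateEndTime": "",
    "prodPcatid": "",
    "prodCatid": "",
    "prodName": "",
}

# First request: read the total record count to work out how many pages to fetch
response = requests.post(url, headers=headers, data=base_data, timeout=10)
response.raise_for_status()
result = response.json()

total_count = int(result["count"])
page_size = int(base_data["limit"])
# Ceiling division, so an exact multiple of page_size does not add an extra empty page
total_pages = (total_count + page_size - 1) // page_size

print(f"Found {total_count} records across {total_pages} pages")

all_data = []

for page in range(1, total_pages + 1):
    try:
        base_data["current"] = str(page)
        response = requests.post(url, headers=headers, data=base_data, timeout=10)
        response.raise_for_status()
        page_result = response.json()
        page_data = page_result["list"]
        all_data.extend(page_data)
        print(f"Fetched page {page}/{total_pages}, {len(all_data)} records so far")
        time.sleep(0.5)  # throttle requests so the server is not hammered
    except Exception as e:
        # Log the failure and skip to the next page
        print(f"Failed to fetch page {page}: {e}")

# CSV column headers: level-1 category, level-2 category, product name,
# min price, avg price, max price, specification, origin, unit, publish date
csv_headers = [
    "一级分类", "二级分类", "品名", "最低价", "平均价",
    "最高价", "规格", "产地", "单位", "发布日期",
]

# utf-8-sig writes a BOM so that Excel detects the encoding of the Chinese text
with open("xinfadi.csv", "w", newline="", encoding="utf-8-sig") as f:
    writer = csv.DictWriter(f, fieldnames=csv_headers)
    writer.writeheader()
    for item in all_data:
        writer.writerow({
            "一级分类": item.get("prodCat", ""),
            "二级分类": item.get("prodPcat", ""),
            "品名": item.get("prodName", ""),
            "最低价": item.get("lowPrice", ""),
            "平均价": item.get("avgPrice", ""),
            "最高价": item.get("highPrice", ""),
            "规格": item.get("specInfo", ""),
            "产地": item.get("place", ""),
            "单位": item.get("unitInfo", ""),
            # Keep the first 10 characters (the date part); "or" also covers a null pubDate
            "发布日期": (item.get("pubDate") or "")[:10],
        })

print(f"All data written to CSV: {len(all_data)} records")
```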
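Computing the page count from `count` and `limit` is a ceiling division. The `total_count // page_size + 1` form requests one extra, empty page whenever the total is an exact multiple of the page size (and one page even when there are no records). A small standalone check, where `page_count` is a hypothetical helper name used only for illustration:

```python
def page_count(total: int, page_size: int) -> int:
    """Pages needed to hold `total` records at `page_size` records per page."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Integer ceiling division without floats: rounds any partial page up.
    return (total + page_size - 1) // page_size


# Compare with the floor-plus-one formula for a page size of 20.
for total in (0, 19, 20, 21, 40):
    print(total, page_count(total, 20), total // 20 + 1)
```

Staying in integer arithmetic avoids any float rounding concerns with `math.ceil(total / page_size)` on very large counts.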

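The CSV step maps each JSON record to a row with `dict.get(key, "")`. That default only applies when a key is missing: if a field comes back as JSON `null`, `get` returns `None`, and slicing `None` for the date raises `TypeError`. Below is a minimal offline sketch of a None-safe mapping that writes to an in-memory buffer, so it runs without network access. The helper names `to_row` and `write_csv` and the sample records are hypothetical, and the field keys are simply the ones the script reads, not a verified description of the xinfadi response schema.

```python
import csv
import io

# CSV header -> JSON key, in output column order (the keys the scraper reads).
FIELD_MAP = {
    "一级分类": "prodCat",    # level-1 category
    "二级分类": "prodPcat",   # level-2 category
    "品名": "prodName",       # product name
    "最低价": "lowPrice",     # minimum price
    "平均价": "avgPrice",     # average price
    "最高价": "highPrice",    # maximum price
    "规格": "specInfo",       # specification
    "产地": "place",          # place of origin
    "单位": "unitInfo",       # unit
    "发布日期": "pubDate",    # publish date
}


def to_row(item: dict) -> dict:
    """Map one API record to a CSV row, treating missing or null fields as ""."""
    row = {}
    for column, key in FIELD_MAP.items():
        value = item.get(key)
        row[column] = "" if value is None else value
    # Keep only the first 10 characters (the date part) of the timestamp.
    row["发布日期"] = str(row["发布日期"])[:10]
    return row


def write_csv(items, stream) -> None:
    """Write a header plus one row per record to a text stream."""
    writer = csv.DictWriter(stream, fieldnames=list(FIELD_MAP))
    writer.writeheader()
    for item in items:
        writer.writerow(to_row(item))


# Made-up records for illustration only: one with a null pubDate, one with a timestamp.
sample = [
    {"prodName": "example-a", "lowPrice": 1.0, "pubDate": None},
    {"prodName": "example-b", "avgPrice": 2.5, "pubDate": "2024-01-02 00:00:00"},
]
buf = io.StringIO()
write_csv(sample, buf)
print(buf.getvalue())
```

In the real script the stream would be the file opened with `encoding="utf-8-sig"` and `newline=""`; keeping the mapping in one dictionary also keeps the header order and the row keys from drifting apart.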